Sign Recognition using Sequential Pattern Trees

Eng-Jon Ong, Richard Bowden

Overview

This work investigates methods for automatic Sign Language Recognition (SLR) from video streams. To this end, novel machine learning models and algorithms are proposed to produce classifiers that can detect and recognise segments of video sequences that contain a meaningful sign.

Motivation

Automated Sign Language Recognition remains a challenging problem to this day. Like spoken languages, sign languages feature thousands of signs, sometimes differing only by subtle changes in hand motion, shape or position. This, compounded by differences in signing style and physiology between individuals, makes SLR an intricate challenge.

Method

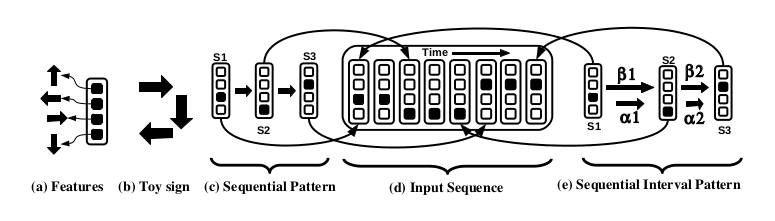

Our approach to automated SLR uses discriminative spatio-temporal

patterns, called Sequential Patterns (SPs). SPs are ordered

sequences of feature subsets that allow for explicit spatio-temporal

feature selection and do not require dynamic time warping for

temporal alignment. We have further improved SPs by integrating

temporal constraints into the pattern, resulting in a Sequential

Interval Pattern (SIP). Detecting SPs and SIPs in an input sequence

is done by finding their representative ordered events (feature

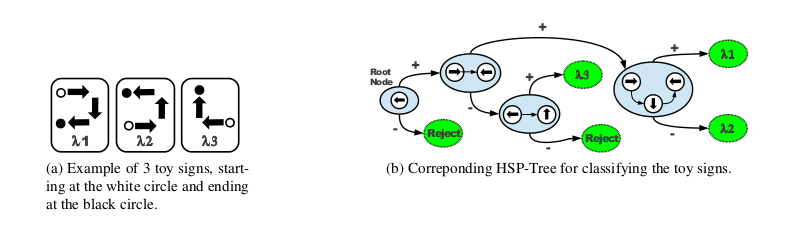

subsets) that satisfy the temporal constraints (for SIPs).  A multi-sign detector and recogniser can be built by

combining a set of SPs or SIPs into a hierarchical structure, called

a Hierarchical Sequential Pattern Tree (HSP-Tree). An example of an

HSP-Tree can be seen below:
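The SP and SIP detection step described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: it assumes each video frame is reduced to a set of active binary features, and that an SIP's temporal constraints are given as (minimum, maximum) frame gaps between consecutive events. The function names `match_sp` and `match_sip` and all feature names are hypothetical.

```python
from typing import FrozenSet, List, Sequence, Set, Tuple

Frame = Set[str]          # features active in one frame (assumed representation)
Event = FrozenSet[str]    # one feature subset of a pattern


def match_sp(events: Sequence[Event], frames: Sequence[Frame]) -> bool:
    """Return True if the events occur in order, each in a later frame."""
    idx = 0
    for frame in frames:
        # An event fires when all of its features are active in the frame.
        if idx < len(events) and events[idx] <= frame:
            idx += 1
    return idx == len(events)


def match_sip(
    events: Sequence[Event],
    gaps: Sequence[Tuple[int, int]],
    frames: Sequence[Frame],
) -> bool:
    """Like match_sp, but gaps[i] bounds the frame distance between
    event i and event i + 1 (an assumed form of temporal constraint)."""
    if not events:
        return True
    if len(gaps) != len(events) - 1:
        raise ValueError("need exactly one gap constraint per consecutive pair")
    # Frames at which the pattern prefix matched so far can end.
    reachable = {t for t, f in enumerate(frames) if events[0] <= f}
    for event, (lo, hi) in zip(events[1:], gaps):
        reachable = {
            t
            for t, f in enumerate(frames)
            if event <= f and any(lo <= t - s <= hi for s in reachable)
        }
        if not reachable:
            return False
    return bool(reachable)


# Hypothetical five-frame sequence and a two-event pattern.
frames: List[Frame] = [
    {"hand_up"},
    {"hand_up", "fist"},
    set(),
    {"hand_right"},
    {"hand_right", "open"},
]
pattern: List[Event] = [frozenset({"hand_up"}), frozenset({"hand_right", "open"})]

sp_found = match_sp(pattern, frames)
sip_tight = match_sip(pattern, [(1, 2)], frames)
sip_loose = match_sip(pattern, [(1, 3)], frames)
```

In this sketch the plain SP is found because only the order of events matters, whereas the SIP is found only when the allowed gap is wide enough to span the distance between the two events. The SIP matcher tracks every frame at which a partial match can end, because a greedy earliest match can miss valid alignments once gap bounds are imposed.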

Results

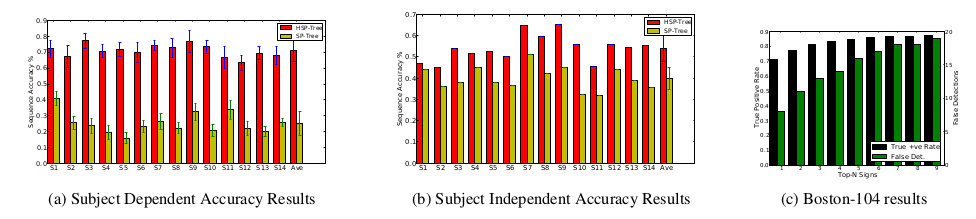

We have evaluated the method on a dataset of continuous sign sentences, demonstrating that it can cope with co-articulation. The proposed approach was shown experimentally to yield significantly higher performance than SP-Trees for unsegmented SLR, on both signer-dependent (49% improvement) and signer-independent (12% improvement) datasets. Additionally, comparisons with HMMs have shown that the proposed method equals or exceeds the accuracy of HMMs in both the signer-dependent (71% HSP vs 63% HMMs) and signer-independent (54% HSP vs 49% HMMs) settings. This is achieved at a significantly reduced processing time (2 minutes for HSP vs 20 minutes for HMMs to process 981 signs).

Publications

Eng-Jon Ong, Nicolas Pugeault, Oscar Koller, Richard Bowden. Sign Spotting using Hierarchical Sequential Patterns with Temporal Intervals. In IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 2014.

Acknowledgements

This work was part of the EPSRC project “Learning to Recognise Dynamic Visual Content from Broadcast Footage”, grant EP/I011811/1.