If you use this dataset or the scripts, please cite our work:

@inproceedings{deepsketch2020,
  title = {{Deep Sketch-Based Modeling: Tips and Tricks}},
  author = {Zhong, Yue and Gryaditskaya, Yulia and Zhang, Honggang and Song, Yi-Zhe},
  booktitle = {Proceedings of International Conference on 3D Vision (3DV)},
  year = {2020}
}

ABOUT THE DATASET

Rendering styles

The dataset contains naive and stylized sketches for the chair category of the ShapeNetCore dataset. Each chair shape folder contains two subfolders, "naive" and "stylized", representing the two rendering styles described below.

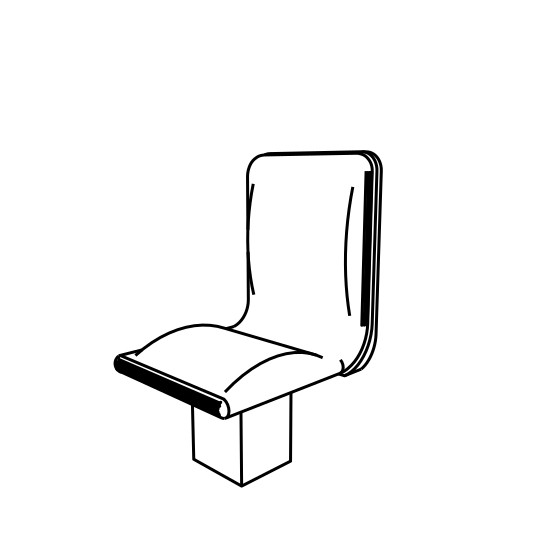

Naive sketch.

A naive sketch is a synthetic sketch generated from a reference 3D model by rendering its silhouettes and creases with a uniform stroke width, which we set to 2.5. We render these 256×256 sketches using Blender Freestyle. Such sketches are clean and accurate in perspective, so reconstruction from them can achieve higher accuracy. These sketches are generated using this Blender script.

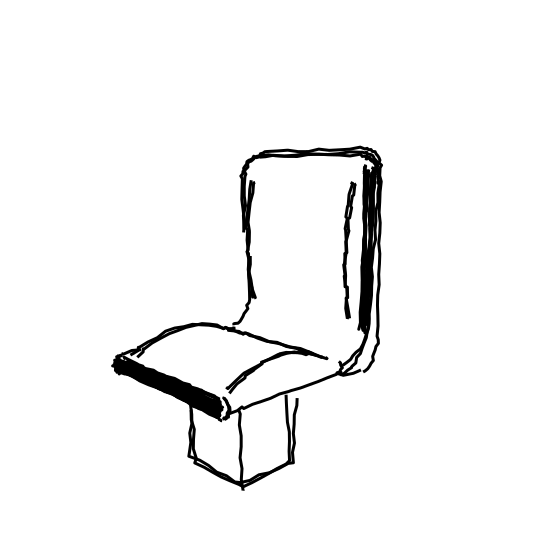

Stylized sketch.

To obtain the stylized dataset, we apply a set of random global and local deformations to each stroke of a naive sketch, using the ‘svg disturber’ script from an open-source library. We used the following parameter settings:

-a -c -n 1.3 -r 2.5 -sl 0.9 -su 1.1 -t 2 -min 1 -max 2 -os 1 -pen 2.5 -penv 1.5 -bg -u

To obtain a sketch in vector format, we use the Blender SVG exporter. The global stroke deformation consists of rotation, scaling and translation. The rotation angle is randomly sampled from [0°, 2.5°]. Scaling need not preserve the stroke aspect ratio; the scale factor is randomly sampled from [0.9, 1.1]. The translation vector is randomly sampled from a disk of radius 2.5. We enable coherent local noise, with the offset sampled randomly from the interval [0, 1.3]. In addition, we enable over-sketching, meaning that a stroke under global and local deformation is traced several times; we allow each stroke to be traced at most two times. Stroke width varies within one sketch: each width is randomly sampled from a normal distribution with mean 2.5 and variance 1.5.
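As a rough illustration of the global deformation and stroke-width sampling described above, the following Python sketch draws parameters from the stated ranges. It is not the ‘svg disturber’ implementation: the function names, the stroke centroid as the pivot, uniform sampling within each range, and the clamping of the width to a small positive minimum are all assumptions made for this example.

```python
import math
import random


def deform_stroke(points, rng, max_rot_deg=2.5, scale_range=(0.9, 1.1), max_shift=2.5):
    """Apply one random global rotation, scaling and translation to a stroke.

    `points` is a non-empty list of (x, y) tuples. Hypothetical helper, not
    part of the 'svg disturber' script.
    """
    # Assumption: the stroke is rotated and scaled about its centroid.
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)

    # Assumption: all parameters are drawn uniformly from their ranges.
    angle = math.radians(rng.uniform(0.0, max_rot_deg))
    sx = rng.uniform(*scale_range)  # independent x and y factors, so the
    sy = rng.uniform(*scale_range)  # aspect ratio is not preserved

    # Uniform sample inside a disk: the square root keeps the density
    # uniform over the area rather than concentrated near the centre.
    radius = max_shift * math.sqrt(rng.random())
    phi = rng.uniform(0.0, 2.0 * math.pi)
    tx, ty = radius * math.cos(phi), radius * math.sin(phi)

    cos_a, sin_a = math.cos(angle), math.sin(angle)
    deformed = []
    for x, y in points:
        dx, dy = (x - cx) * sx, (y - cy) * sy
        deformed.append((cx + dx * cos_a - dy * sin_a + tx,
                         cy + dx * sin_a + dy * cos_a + ty))
    return deformed


def sample_pen_width(rng, mean=2.5, variance=1.5, min_width=0.1):
    """Draw a stroke width from a normal distribution with the given variance."""
    # random.gauss expects a standard deviation, hence the square root.
    # Assumption: widths are clamped to stay positive.
    return max(min_width, rng.gauss(mean, math.sqrt(variance)))
```

For example, `deform_stroke([(0.0, 0.0), (10.0, 0.0)], random.Random(0))` returns a slightly rotated, rescaled and shifted copy of a horizontal stroke.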

Viewpoints

For each style, a folder "base" contains 8 base viewpoints, where the camera elevation is set to 10 degrees to imitate human perspective in the real world, and the azimuth takes values equidistantly sampled from 0 to 360 degrees.
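The base camera layout can be sketched in a few lines of Python. The function name is hypothetical, and two details are assumptions not stated in the text: that the azimuths start at 0° and exclude 360° (giving 45° steps), and that the scene is z-up with the shape at the origin. The distance of 1.5 is the value given for the "base" folder.

```python
import math


def base_viewpoints(n_views=8, elevation_deg=10.0, distance=1.5):
    """Return (azimuth_deg, elevation_deg, camera_position) for each base view."""
    views = []
    for k in range(n_views):
        # Assumption: equidistant azimuths 0, 45, ..., 315 degrees.
        azimuth_deg = k * 360.0 / n_views
        azi = math.radians(azimuth_deg)
        elev = math.radians(elevation_deg)
        # Assumption: z-up coordinate system, camera looking at the origin.
        position = (distance * math.cos(elev) * math.cos(azi),
                    distance * math.cos(elev) * math.sin(azi),
                    distance * math.sin(elev))
        views.append((azimuth_deg, elevation_deg, position))
    return views
```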

For each style, a folder "bias" contains 5 additional viewpoints around each of the 8 base viewpoints, generated by sampling the camera elevation and azimuth angles from normal distributions whose means match the elevation and azimuth of the corresponding base viewpoint. We set the variance to 7 degrees. To avoid viewpoints that are too similar to the base viewpoints or that deviate too much from them, we impose lower and upper thresholds of 5 and 15 degrees, respectively, on the deviation. The distance between the virtual camera and the 3D shape is randomly sampled from a normal distribution with mean 1.5, restricted to the range [1.4, 1.6]. We use a perspective camera for all viewpoints.
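One possible reading of this sampling procedure is sketched below using rejection sampling. Several details are assumptions rather than facts about the original scripts: the value 7 is used as a standard deviation in degrees, the deviation from the base viewpoint is measured as the combined norm of the azimuth and elevation offsets, the distance is resampled until it falls in [1.4, 1.6], and the distance spread `dist_std` is a made-up value because the text does not give one.

```python
import math
import random


def sample_bias_viewpoint(base_azi, base_elev, rng, angle_std=7.0,
                          min_dev=5.0, max_dev=15.0, dist_std=0.05):
    """Sample one viewpoint near a base viewpoint (hypothetical helper).

    Returns (azimuth_deg, elevation_deg, distance).
    """
    # Assumption: reject offsets whose combined magnitude is outside
    # [min_dev, max_dev] degrees and draw again.
    while True:
        d_azi = rng.gauss(0.0, angle_std)
        d_elev = rng.gauss(0.0, angle_std)
        if min_dev <= math.hypot(d_azi, d_elev) <= max_dev:
            break

    # Assumption: the camera distance is resampled until it lies in
    # [1.4, 1.6]; dist_std is not specified in the text.
    while True:
        distance = rng.gauss(1.5, dist_std)
        if 1.4 <= distance <= 1.6:
            break

    return ((base_azi + d_azi) % 360.0, base_elev + d_elev, distance)


def bias_viewpoints(base_azi, base_elev, rng, n_views=5):
    """Sample the 5 additional viewpoints around one base viewpoint."""
    return [sample_bias_viewpoint(base_azi, base_elev, rng) for _ in range(n_views)]
```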

The camera parameters are encoded in the file names; for instance, azi_39_elev_5_0001.jpg was generated with an azimuth of 39° and an elevation of 5°. We have not saved the distances between the virtual camera and the 3D shape for the sketches in the "bias" folder. For the sketches in the "base" folder, this distance is set to 1.5.
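A file name of this form can be parsed with a small regular expression, as in the sketch below. The function name is hypothetical. The pattern assumes integer angles (optionally negative) and keeps the trailing four-digit field as an opaque string, since its meaning is not documented here.

```python
import re

# Assumption: names follow azi_<int>_elev_<int>_<digits>.jpg exactly.
_VIEW_PATTERN = re.compile(r"azi_(-?\d+)_elev_(-?\d+)_(\d+)\.jpg$")


def parse_view(filename):
    """Extract the azimuth and elevation (in degrees) from a sketch file name."""
    match = _VIEW_PATTERN.search(filename)
    if match is None:
        raise ValueError(f"unexpected file name: {filename!r}")
    return {
        "azimuth": int(match.group(1)),
        "elevation": int(match.group(2)),
        "suffix": match.group(3),  # trailing field, meaning not documented
    }
```

For instance, `parse_view("azi_39_elev_5_0001.jpg")` yields an azimuth of 39 and an elevation of 5.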