TotalCapture Dataset

Matthew Trumble, Andrew Gilbert, Charles Malleson, Adrian Hilton, and John Collomosse, Centre for Vision, Speech & Signal Processing University of Surrey, United Kingdom appeared atBritish Machine Vision Conference, BMVC 2017

Introduction

The TotalCapture dataset is designed for 3D pose estimation from markerless multi-camera capture, It is the first dataset to have fully synchronised muli-view video, IMU and Vicon labelling for a large number of frames (∼1.9M), for many subjects, activities and viewpoints. The dataset is publically avaliable, and the source of dataset should be acknowledged in all publications in which it is used as by referencing the following paper : and this web-site

Dataset Link

TotalCapture Dataset Link

Note that the dataset requires registeration, please check the licence information at the bottom of the page

Citation

@inproceedings{Trumble:BMVC:2017,

AUTHOR = "Trumble, Matt and Gilbert, Andrew and Malleson, Charles and Hilton, Adrian and Collomosse, John",

TITLE = "Total Capture: 3D Human Pose Estimation Fusing Video and Inertial Sensors",

BOOKTITLE = "2017 British Machine Vision Conference (BMVC)",

YEAR = "2017",}

Dataset Overview

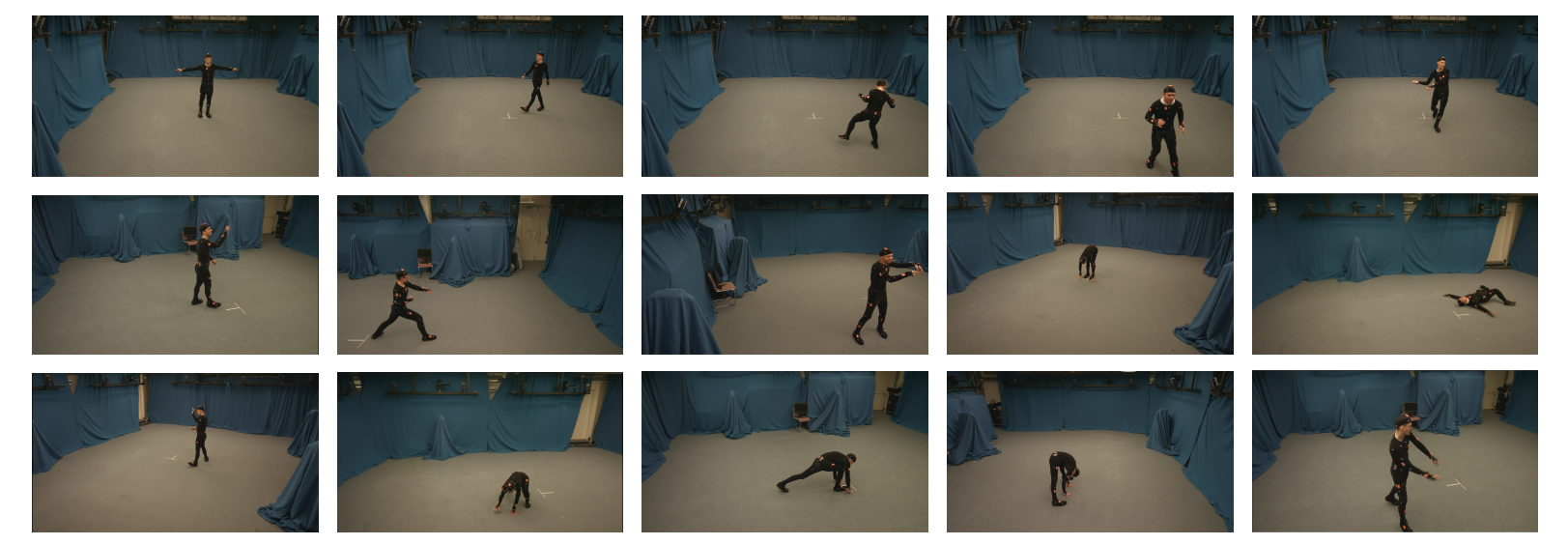

The dataset contains a number of subjects performing varied actions and viewpoints. It was captured indoors in a volume measuring roughly 8x4m with 8 calibrated HD video cameras at 60Hz. There are 4 male and 1 female subjects each performing four diverse performances, repeated 3 times: ROM, Walking, Acting and Freestyle. An example of each performance and subject variation is shown below. There is a total of 1892176 frames of synchronised video, IMU and Vicon data (although some is withheld as test footage for unseen subjects). The variation and body motions contained in particular within the acting and freestyle sequences are very challenging with actions such as yoga, giving directions, bending over and crawling performed in both the train and test data.

License

The datasets are free for research use only.This agreement must be confirmed by a senior representative of your organisation. To access and use this data you agree to the following conditions:

- Multiple view video sets and associated data files will be used for research purposes only.

- The dataset source should be acknowledged in all publications and publicity material which it is used or results derived from the data are used by referencing the relevant publication as indicated for specific datasets and inclusion of the repository link

- The datasets should not be used for commercial purposes.

- The data should not be redistributed.

To request access to the TotalCapture Dataset, or for other queries please contact: